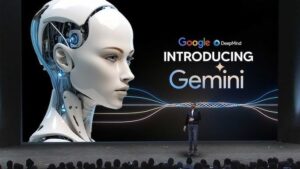

Google’s ‘Gemini 2.0 Flash Thinking’ Model Unveils Its Reasoning Process

To address the AI ‘black box’ problem, Google has introduced a new AI model called “Gemini 2.0 Flash Thinking,” designed to explain its reasoning process alongside generating responses. The model is currently available as an experimental feature in Google AI Studio and through the Gemini API.

According to Jeff Dean, chief scientist at Google DeepMind, the model delivers “promising results” when given more time for inference, the stage at which a trained model produces outputs from the input it receives.

Google isn’t the first to release a reasoning-focused AI model. Earlier this year, OpenAI launched its own reasoning model, o1, which also performed better when given more time to reason.

How the Model Works:

Google claims that its “Gemini 2.0 Flash Thinking” model is “trained to use thoughts in a way that enhances reasoning capabilities.” The model can understand code, solve math problems, grasp geometric concepts, and generate questions tailored to specific knowledge levels.

For instance, if a user asks the model to create questions for a US Advanced Placement (AP) Physics exam, it first identifies the key topics such a course typically covers. It then constructs scenarios in which the relevant concepts apply, stating any assumptions it makes about the exam. Before presenting a question to the user, it checks both the question and its solution for accuracy.

Currently, users can input up to 32,000 tokens into the model, and its responses are limited to 8,000 tokens. The model accepts text and images as input but produces text-only output.
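These limits can be expressed as a simple pre-flight check on a request. The sketch below is hypothetical: `check_request` and the constants are illustrative names, not part of any Google SDK; the numbers are the limits reported for the experimental release and may change; and it assumes token counts were already obtained from a tokenizer, which this code does not do.

```python
# Limits as reported for the experimental release (assumed values; they may change).
MAX_INPUT_TOKENS = 32_000
MAX_OUTPUT_TOKENS = 8_000
ALLOWED_INPUT_TYPES = {"text", "image"}


def check_request(input_tokens: int, requested_output_tokens: int,
                  input_types: set) -> list:
    """Hypothetical helper: return a list of problems; empty means the request fits."""
    problems = []
    # Input budget: prompt text plus any images, already converted to a token count.
    if input_tokens > MAX_INPUT_TOKENS:
        problems.append(f"input has {input_tokens} tokens; limit is {MAX_INPUT_TOKENS}")
    # Output budget: the longest response the caller is asking for.
    if requested_output_tokens > MAX_OUTPUT_TOKENS:
        problems.append(
            f"requested {requested_output_tokens} output tokens; limit is {MAX_OUTPUT_TOKENS}"
        )
    # Only text and images are accepted as input.
    unsupported = input_types - ALLOWED_INPUT_TYPES
    if unsupported:
        problems.append(f"unsupported input types: {sorted(unsupported)}")
    return problems
```

For example, a 30,000-token text-and-image prompt asking for up to 8,000 output tokens passes with no problems, while a 40,000-token prompt that includes audio and asks for 10,000 output tokens fails all three checks.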

Why It Matters:

One of the major concerns raised by regulatory bodies worldwide is the lack of transparency in how AI models reach their decisions. This opacity makes it hard to judge whether AI-generated outputs are accurate and fair.

To address this, governments have been exploring measures to increase transparency. For example, earlier this year, India’s Economic Advisory Council to the Prime Minister (EAC-PM) recommended that the government allow external audits of AI models’ core algorithms through open licensing. The council also suggested creating AI factsheets to support system audits by independent experts, aiming to “demystify the black box nature of AI,” according to a MediaNama report.

However, the council’s factsheet proposal focused on documentation: coding/revision control logs, data sources, training procedures, performance metrics, and known limitations. In contrast, reasoning models such as Google’s go a step further by displaying the intermediate reasoning steps the model works through before it answers. This allows users and auditors to see not only how companies build and train their models but also how a model arrives at a specific conclusion. As governments worldwide draft AI regulations, the ability to demonstrate clear reasoning could help companies comply with emerging standards for AI explainability and accountability.
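To make the contrast concrete, a factsheet of the kind the council described can be sketched as a plain record. This is a hypothetical schema, not one published by the EAC-PM: the field names simply mirror the categories listed above, and `model_name`, `AIFactsheet` and `missing_sections` are illustrative additions. Note what it cannot hold: nothing in it records how the model reached any particular answer.

```python
from dataclasses import dataclass, field, fields


@dataclass
class AIFactsheet:
    """Hypothetical factsheet schema mirroring the categories in the EAC-PM proposal."""
    model_name: str
    revision_log: list = field(default_factory=list)          # coding / revision control logs
    data_sources: list = field(default_factory=list)          # where training data came from
    training_procedure: str = ""                              # how the model was trained
    performance_metrics: dict = field(default_factory=dict)   # metric name -> score
    known_limitations: list = field(default_factory=list)     # documented failure modes

    def missing_sections(self) -> list:
        """Names of documentation sections left empty, in declaration order."""
        return [
            f.name
            for f in fields(self)
            if f.name != "model_name" and not getattr(self, f.name)
        ]
```

An auditor could call `missing_sections()` to flag undocumented categories before a review; for a sheet that lists only its data sources, it would return the other four section names.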